Rows: 263

Columns: 29

$ ID <dbl> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16,…

$ Longitude <dbl> 89.1134, 89.1232, 89.1281, 89.1296, 89.1312, 89.1305, …

$ Latitude <dbl> 22.7544, 22.7576, 22.7499, 22.7568, 22.7366, 22.7297, …

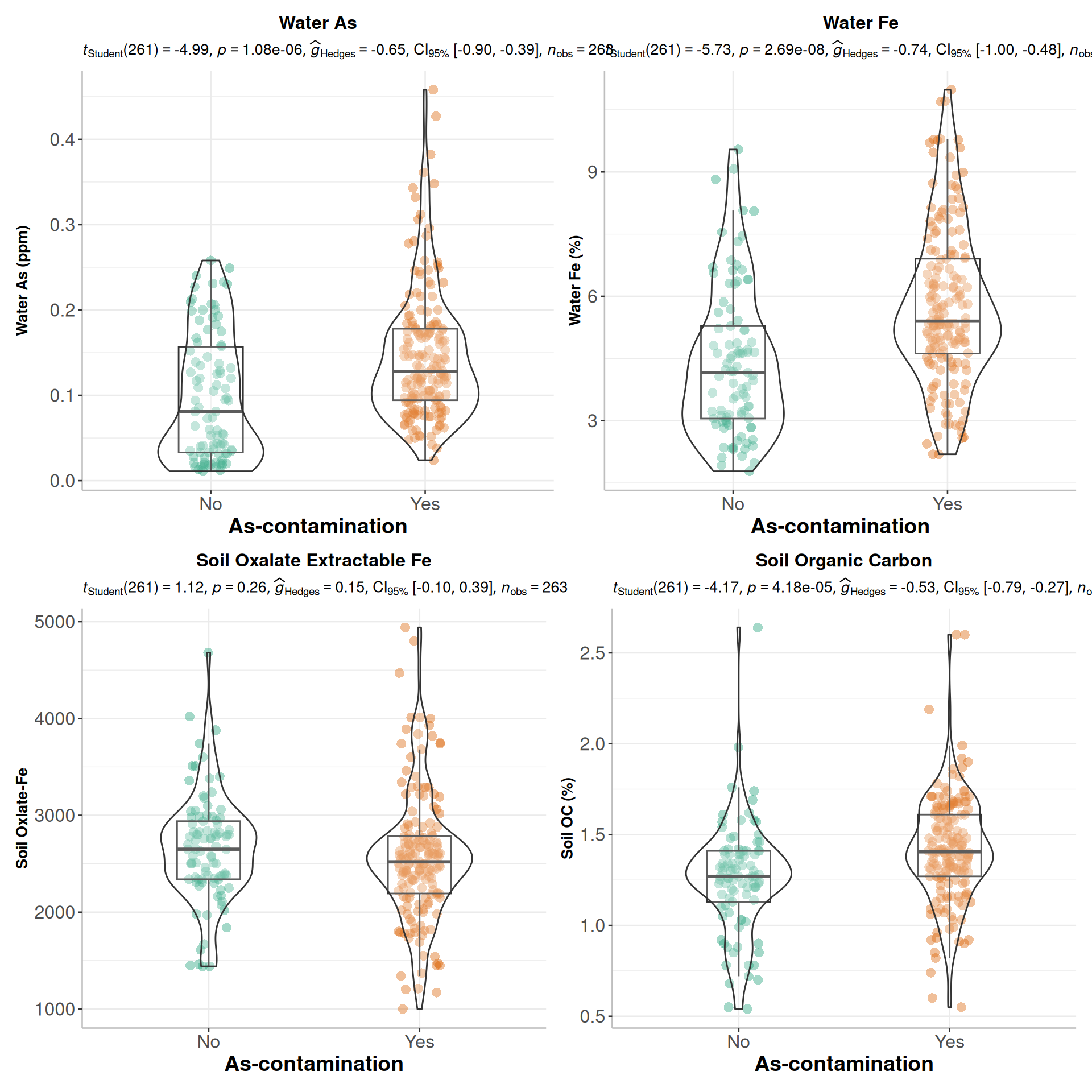

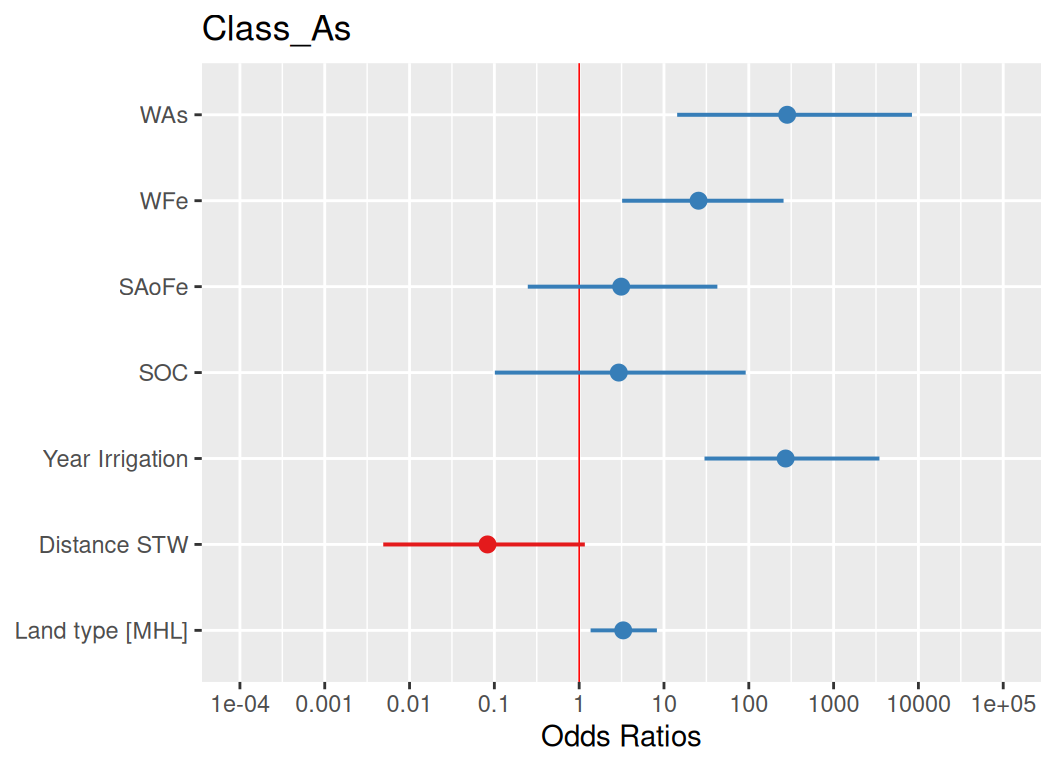

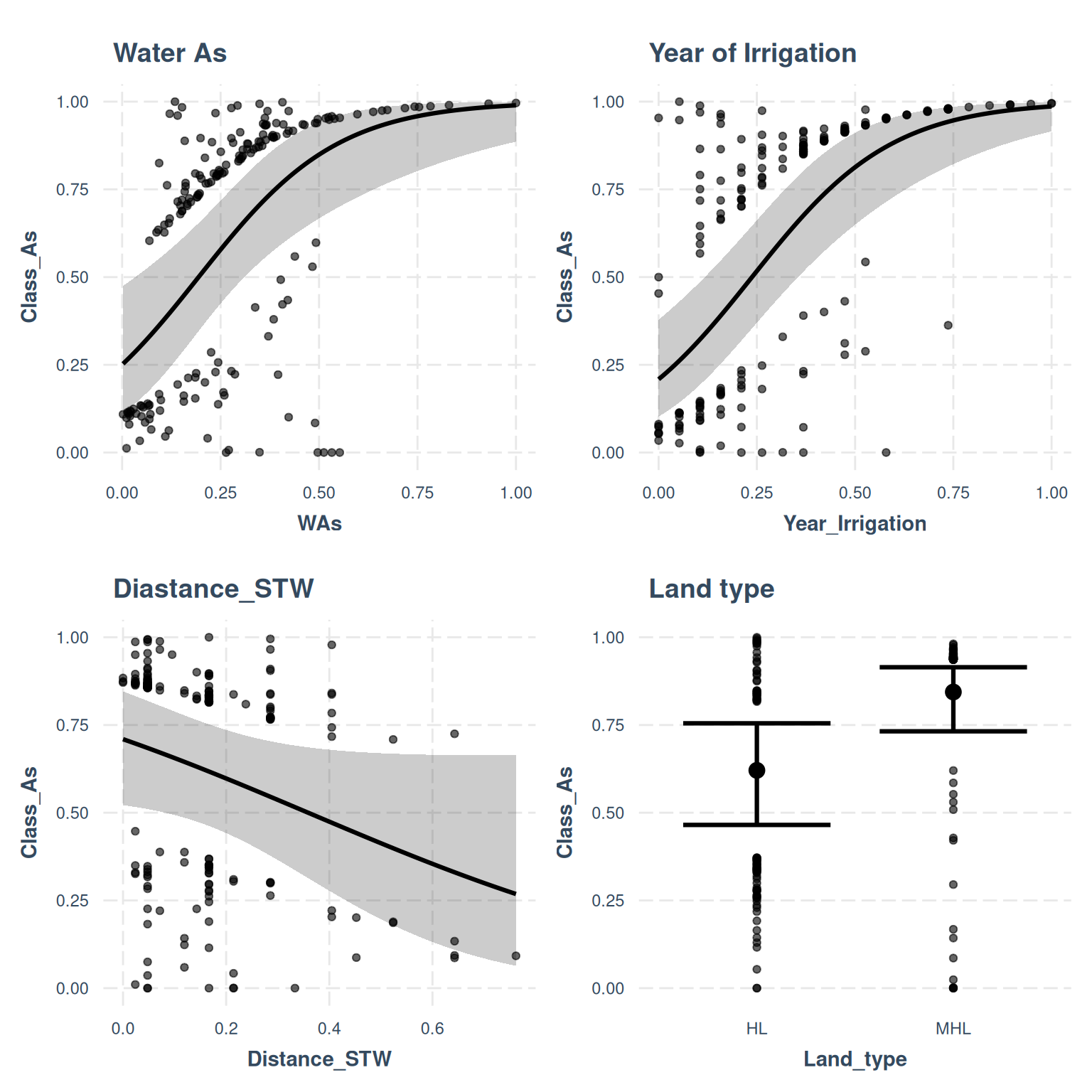

$ WAs <dbl> 0.059, 0.059, 0.079, 0.122, 0.072, 0.042, 0.075, 0.064…

$ WP <dbl> 0.761, 1.194, 1.317, 1.545, 0.966, 1.058, 0.868, 0.890…

$ WFe <dbl> 3.44, 4.93, 9.70, 8.58, 4.78, 6.95, 7.81, 8.14, 8.99, …

$ WEc <dbl> 1.03, 1.07, 1.40, 0.83, 1.42, 1.82, 1.71, 1.74, 1.57, …

$ WpH <dbl> 7.03, 7.06, 6.84, 6.85, 6.95, 6.89, 6.86, 6.98, 6.82, …

$ WMg <dbl> 33.9, 34.1, 40.5, 28.4, 43.4, 43.2, 50.1, 51.5, 48.2, …

$ WNa <dbl> 69.4, 74.6, 89.4, 22.8, 93.0, 165.7, 110.5, 127.0, 96.…

$ WCa <dbl> 99.1, 94.3, 133.9, 103.8, 130.5, 153.3, 172.0, 163.2, …

$ WK <dbl> 5.3, 5.7, 6.0, 4.0, 5.3, 6.5, 5.9, 6.4, 6.5, 3.7, 4.4,…

$ WS <dbl> 2.936, 2.826, 2.307, 1.012, 2.511, 2.764, 2.792, 1.562…

$ SAs <dbl> 29.10, 45.10, 23.20, 23.80, 26.00, 25.60, 26.30, 31.60…

$ SPAs <dbl> 9.890, 10.700, 5.869, 6.031, 6.627, 9.530, 6.708, 8.63…

$ SAoAs <dbl> 13.280, 21.900, 12.266, 12.596, 13.805, 13.585, 13.969…

$ SAoFe <dbl> 2500, 2670, 2160, 2500, 2060, 2500, 2520, 2140, 2150, …

$ SpH <dbl> 7.74, 7.87, 8.03, 8.07, 7.81, 7.77, 7.66, 7.89, 8.00, …

$ SEc <dbl> 1.128, 1.021, 1.257, 1.067, 1.354, 1.442, 1.428, 1.385…

$ SOC <dbl> 1.66, 1.26, 1.36, 1.61, 1.26, 1.74, 1.71, 1.69, 1.41, …

$ SP <dbl> 13.79, 15.31, 15.54, 16.28, 14.20, 13.41, 13.26, 14.84…

$ Sand <dbl> 16.3, 11.1, 12.3, 12.7, 12.1, 16.7, 16.8, 13.7, 12.6, …

$ Silt <dbl> 44.8, 48.7, 46.4, 43.6, 50.9, 43.6, 43.4, 40.8, 44.9, …

$ Clay <dbl> 38.9, 40.2, 41.3, 43.7, 37.1, 39.8, 39.8, 45.5, 42.4, …

$ Elevation <dbl> 3, 5, 4, 3, 5, 2, 2, 3, 3, 3, 2, 5, 6, 6, 5, 5, 4, 6, …

$ Year_Irrigation <dbl> 14, 20, 10, 8, 10, 9, 8, 10, 8, 2, 20, 4, 15, 10, 5, 4…

$ Distance_STW <dbl> 5, 6, 5, 8, 5, 5, 10, 8, 10, 8, 5, 5, 9, 5, 10, 10, 12…

$ Land_type <chr> "MHL", "MHL", "MHL", "MHL", "MHL", "MHL", "MHL", "MHL"…

$ Land_type_ID <dbl> 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 1, 2, 1, 1, 1, 1, 1, …